How to Deal with Server Load Balancing for a Big Website?

Today's high-traffic websites must handle hundreds of thousands or even millions of simultaneous requests from users and customers and return the correct page elements for each one, quickly and reliably. For the servers behind such a website, every traffic peak is a test of whether they can keep up, so that end users get uninterrupted and comfortable access to the service.

What is server load balancing, and why is it important for complex websites?

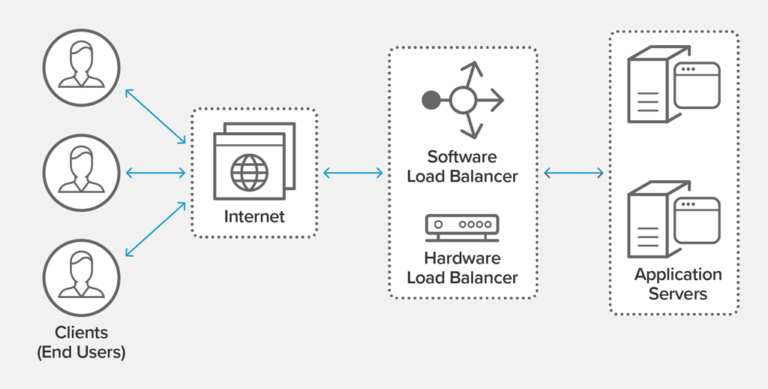

Within an IT infrastructure, the load balancer sits in front of the servers and automatically distributes user requests among all servers that are ready to handle them. It does this in a way that maximizes speed and resource utilization and ensures that no request is sent to a server that's unavailable or in poor health (which could reduce performance). If one server fails, the load balancer redirects its traffic to the remaining servers. As soon as a new server is added to the pool, the load balancer starts sending requests to it. Adding and removing servers can be automated, which makes the setup flexible and helps keep costs down.

Source: NGINX
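To make the behaviour described above more concrete, here is a minimal Python sketch of a backend pool, assuming servers are identified by plain name strings. `BackendPool`, its methods, and the `app1`/`app2`/`app3` names are hypothetical and not taken from any real load balancer. The sketch only shows how a failed server is skipped and how a newly added server starts receiving requests right away. The simple rotation it uses is just one possible selection strategy.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class BackendPool:
    """Toy pool of backend servers (hypothetical, for illustration only)."""
    servers: list[str] = field(default_factory=list)  # all registered servers
    down: set[str] = field(default_factory=set)       # servers currently marked as failed
    _counter: count = field(default_factory=count, repr=False)  # drives the rotation

    def add(self, server: str) -> None:
        # A newly added server becomes a candidate on the very next pick().
        if server not in self.servers:
            self.servers.append(server)

    def remove(self, server: str) -> None:
        self.servers.remove(server)
        self.down.discard(server)

    def mark_down(self, server: str) -> None:
        # Traffic is redirected away from this server until mark_up() is called.
        self.down.add(server)

    def mark_up(self, server: str) -> None:
        self.down.discard(server)

    def pick(self) -> str:
        # Only servers that are not marked as failed are considered.
        available = [s for s in self.servers if s not in self.down]
        if not available:
            raise RuntimeError("no available backend servers")
        # Rotate through the remaining servers in order.
        return available[next(self._counter) % len(available)]
```

For example, after `pool.add("app1")`, `pool.add("app2")` and `pool.mark_down("app1")`, every call to `pool.pick()` returns `"app2"` until `app1` is marked up again or another server is added.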

A load balancer helps optimize server resource utilization and prevents individual servers from being overloaded. This is especially important for complex websites, because all page elements (text, graphics, animations, videos, and application data) must be delivered in the shortest possible time, which is only achievable when resources are allocated optimally.

What is a big website from the DevOps perspective?

Internet technologies have changed rapidly over the years. Internet users' expectations have changed in a similar way, and with them, companies' approach to presenting their products and services as effectively as possible. Static websites have long since given way to dynamic pages full of regularly changing content, which load servers with requests in a less predictable way than plain static files. Examples include news websites, blogs, web applications, and online stores. Such pages often have many dependencies, connect to different APIs, contain internal and external integrations, and refer to other systems. This complexity affects a website's performance and how quickly it responds to user requests. On top of that, once a website becomes popular, high traffic adds to the load created by all of these factors.

Server load balancing of a complex page

From the infrastructure's point of view, complex websites have higher hosting requirements: they must handle heavy traffic and irregular traffic spikes, and also provide a fallback solution in case of an incident. The architecture of the entire solution matters as well. Even when the development side (website optimization, scripts, plugins, file compression) is properly configured, some DevOps elements still need to go hand in hand with it. Complex websites may contain more "communicating vessels", i.e. interdependent components, than their simpler counterparts. An extensive website may, for example, use several different types of databases at the same time, pull large amounts of data from APIs within a single second, and manage many interdependent networks.

Load balancer

Load balancers ensure that incoming access requests are allocated to a server that's able to handle them in an optimal manner. In addition, they enable cost-effective scaling of solutions and ensure the high availability of services.

The market offers many ways to implement load balancing, from free (e.g. HAProxy, Nginx) to commercial (e.g. Cisco, F5) solutions, available as hardware, software, or cloud options.

- When choosing a hardware load balancer, we can assume that the manufacturer provides reliability as part of the whole package. On the one hand, we're limited to what the manufacturer offers; on the other hand, we get full support in case of performance problems.

- A software load balancer doesn't give us control over the underlying hardware. Its capabilities depend on the software and the resources assigned to it (e.g. the number of vCPUs, the amount of RAM, and the number of connections it can handle).

- A cloud load balancer combines the previous options: the cloud provider offers either a load balancer that the provider manages on its own infrastructure, or a software load balancer that we deploy and manage ourselves.

Major public cloud providers offer load balancing under their own product names: Amazon provides Elastic Load Balancing (ELB) on AWS, Microsoft provides Azure Load Balancer, and Google Cloud Platform provides Cloud Load Balancing.

How to deal with server load balancing?

The approach to website hosting has changed a lot over the years. Even though servers have become far more powerful, a large website is rarely hosted on a single server. The load balancer has become one of the key elements in the design of the entire infrastructure. A properly implemented load balancer optimizes resource usage, reduces the load on individual servers, and increases overall throughput.

Choosing the type of load balancer

A load balancer is the first element of the infrastructure that the customer connects to, and the only one visible to them, regardless of whether it's a hardware or software solution. A hardware load balancer runs on dedicated physical equipment, which requires an upfront investment. A software load balancer can be installed on a virtual machine and can also act as an application delivery controller. A cloud load balancer, on the other hand, is the most flexible choice.

Algorithm selection

How the traffic passing through the load balancer is distributed depends on the algorithm used. Four of the best-known algorithms are Round Robin, Weighted Round Robin, Least Connections, and Weighted Least Connections.

- Round Robin is the simplest mechanism: incoming requests are assigned to the available servers one after another in a fixed, repeating order, with no priority given to any server.

- Weighted Round Robin assigns each server a weight, so servers with higher weights receive proportionally more requests (useful when some machines are more powerful than others).

- The Least Connections algorithm sends each new request to the server with the fewest active connections.

- Weighted Least Connections takes into account both the number of active connections on each server and the weight assigned to it, so more powerful servers can handle more connections at the same time.
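The four algorithms can be sketched in a few lines of Python each. This is a simplified illustration, assuming servers are name strings and that active connection counts and weights are already known. The function names are hypothetical, and real load balancers implement these strategies with more bookkeeping. For Weighted Round Robin the sketch uses one common "smooth" variant, which spreads out picks of heavier servers instead of sending them in one burst.

```python
from collections.abc import Iterator
from itertools import cycle


def round_robin(servers: list[str]) -> Iterator[str]:
    """Round Robin: hand out servers in a fixed, repeating order."""
    return cycle(servers)


def smooth_weighted_round_robin(weights: dict[str, int]) -> Iterator[str]:
    """Weighted Round Robin, "smooth" variant.

    Each round, every server's running score grows by its weight; the server
    with the highest score is chosen and its score is reduced by the total
    weight. Over one full cycle each server is picked `weight` times.
    """
    scores = {server: 0 for server in weights}
    total = sum(weights.values())
    while True:
        for server, weight in weights.items():
            scores[server] += weight
        chosen = max(scores, key=scores.get)  # ties go to the first server listed
        scores[chosen] -= total
        yield chosen


def least_connections(active: dict[str, int]) -> str:
    """Least Connections: pick the server with the fewest active connections."""
    return min(active, key=active.get)


def weighted_least_connections(active: dict[str, int], weights: dict[str, int]) -> str:
    """Weighted Least Connections: the lowest connections-to-weight ratio wins."""
    return min(active, key=lambda server: active[server] / weights[server])


if __name__ == "__main__":
    rr = round_robin(["app1", "app2", "app3"])
    print([next(rr) for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']
    wrr = smooth_weighted_round_robin({"app1": 5, "app2": 1, "app3": 1})
    print([next(wrr) for _ in range(7)])  # ['app1', 'app1', 'app2', 'app1', 'app3', 'app1', 'app1']
    print(least_connections({"app1": 12, "app2": 4, "app3": 9}))  # app2
    print(weighted_least_connections({"app1": 12, "app2": 4}, {"app1": 4, "app2": 1}))  # app1
```

In the last example `app1` wins despite having more connections, because 12 / 4 = 3 is lower than 4 / 1 = 4: its higher weight lets it carry more load.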

Using a load balancer to handle HTTPS

Depending on the configuration, the load balancer can take over HTTPS handling (often called TLS termination). The domain certificate is then installed on the load balancer rather than on the application servers. The load balancer decrypts incoming traffic and forwards requests to the application servers over plain HTTP, which reduces the load on the back-end servers.
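A minimal Python sketch of the two pieces involved, assuming the certificate and key files exist (the paths used below are placeholders). `make_tls_context` builds a server-side TLS context with the standard `ssl` module, and the hypothetical helper `backend_headers` prepares headers for the plain-HTTP request to an application server. It uses the widely used X-Forwarded-For and X-Forwarded-Proto headers so the back end still knows the client's address and that the original request was HTTPS. Header names are treated case-sensitively here for simplicity, although HTTP header names are case-insensitive.

```python
import ssl


def make_tls_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Server-side TLS context: the domain certificate lives on the load balancer."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse outdated protocol versions
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx


def backend_headers(headers: dict[str, str], client_ip: str) -> dict[str, str]:
    """Headers for the plain-HTTP request forwarded to an application server.

    After decryption the back end no longer sees the TLS connection, so the
    load balancer records the client address and the original scheme.
    """
    forwarded = dict(headers)  # copy, so the caller's dict is not modified
    previous = forwarded.get("X-Forwarded-For")
    # Append to an existing chain of proxies, or start a new one.
    forwarded["X-Forwarded-For"] = f"{previous}, {client_ip}" if previous else client_ip
    forwarded["X-Forwarded-Proto"] = "https"
    return forwarded
```

In a real listener, the context would wrap the accepting socket, e.g. `make_tls_context("lb.crt", "lb.key").wrap_socket(sock, server_side=True)`. Only the load balancer then needs the certificate and the CPU time for encryption.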

Running health checks

Server health checks are an equally important load balancer function. There's no point in sending requests to hosts that are unreachable or performing poorly. Load balancers regularly check each server to make sure it can handle requests. If a server fails its checks several times in a row, for example by not responding or by responding more slowly than the defined threshold, it's considered unhealthy. It's then taken out of rotation, and incoming requests are no longer routed to it.
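The threshold logic can be sketched in Python as follows. The sketch assumes a probe is any callable that returns a response time in seconds or raises an exception when the server can't be reached. `HealthTracker` and `check_passes` are hypothetical names, the `fall` and `rise` parameter names mirror HAProxy's server options of the same name, and the default values here are arbitrary.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class HealthTracker:
    """Tracks one server's health from consecutive check results (hypothetical helper)."""
    fall: int = 3          # consecutive failed checks before leaving rotation
    rise: int = 2          # consecutive passed checks before rejoining rotation
    healthy: bool = True
    _fails: int = 0
    _passes: int = 0

    def record(self, passed: bool) -> bool:
        """Record one check result and return whether the server is now healthy."""
        if passed:
            self._passes += 1
            self._fails = 0
            if not self.healthy and self._passes >= self.rise:
                self.healthy = True   # back in rotation
        else:
            self._fails += 1
            self._passes = 0
            if self.healthy and self._fails >= self.fall:
                self.healthy = False  # taken out of rotation
        return self.healthy


def check_passes(probe: Callable[[], float], threshold_s: float) -> bool:
    """A check passes if the probe responds within the threshold without raising."""
    try:
        return probe() <= threshold_s
    except Exception:
        return False
```

Requiring several consecutive failures, rather than one, keeps a single slow response from knocking a healthy server out of rotation, and requiring several successes keeps a flapping server from rejoining too early.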

Server load balancing - summary

In recent years, load balancing has become a key element in infrastructure planning. Distributing traffic remains its most important role, but a load balancer also provides other capabilities, including the increasingly sought-after high availability. A correct configuration keeps website features working properly, ensures a comfortable experience for end users, and builds a positive image of the company.

Are you wondering which load balancer to choose and how to configure it correctly? Our DevOps specialists comprehensively deal with infrastructure, so after analyzing your needs, they’ll advise you and prepare the best solution.